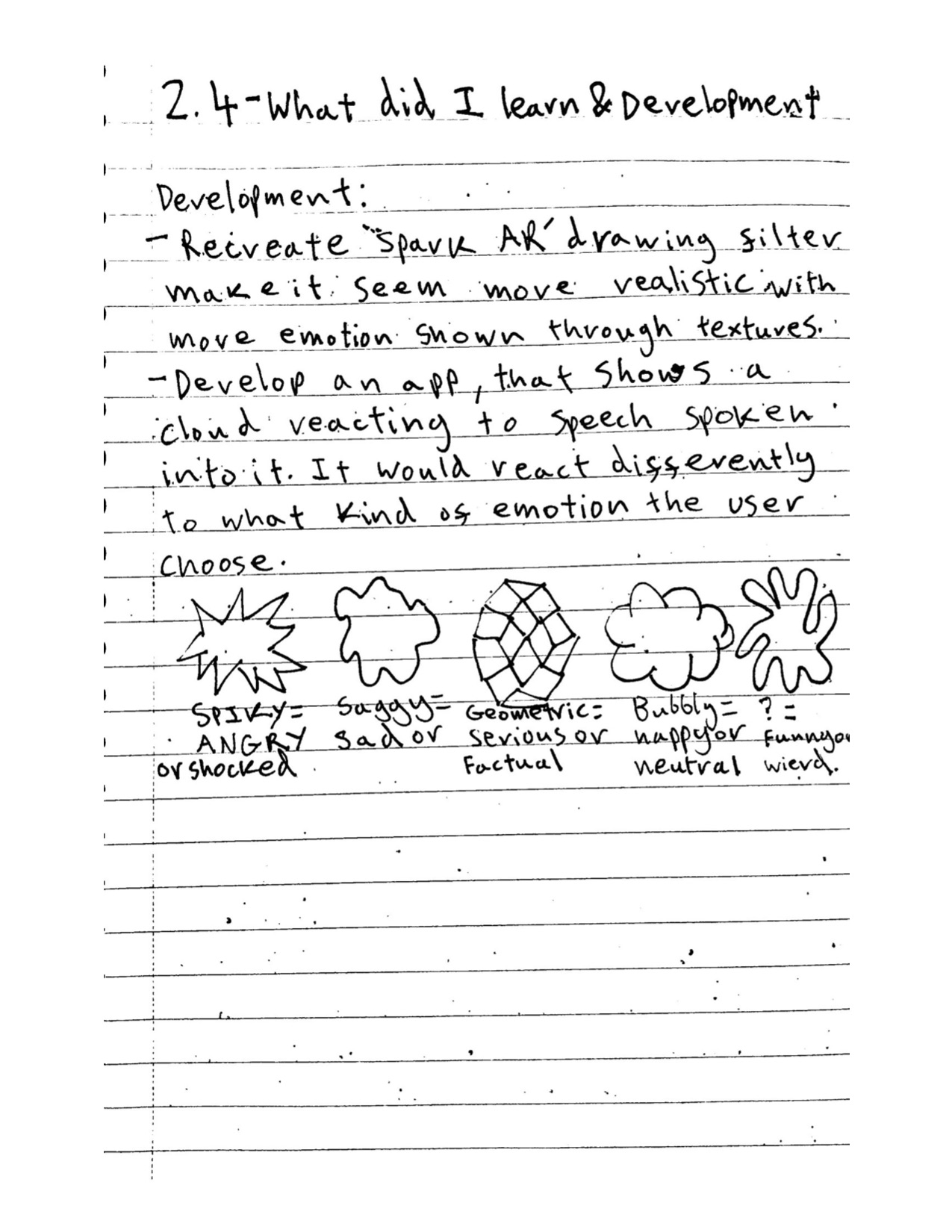

This is the development of my project 2.5. My first idea was to create something that detected the weather and translated that data into a texture or colour to place onto a cloud. The user could then use this to show other people what mood they were in, reflected by the weather at the time. However, I didn't feel this was personalised or individual enough, so my second idea was to develop how the cloud would react when the user spoke into it, showing what emotion the user felt. To do this, I wanted to show the emotion in the user's tone of voice through the colour the cloud would turn. For example, if someone was angry, the cloud would turn red, based on the detected volume and frequency of their voice.

I thought Xcode would be perfect because the materials in the app are quite easy to use, and through code these materials could be made to react to the volume of the user's voice. To do this, I used AudioKit, an open-source audio framework from GitHub, which can detect sound and make objects within the app react to it. In the code, I made the cloud pulse when the person spoke louder and change colour when the frequency of the input was higher.
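The pulse-and-colour behaviour described above can be sketched as a small mapping function. This is a minimal, hypothetical sketch, not the code from my project. It leaves out the AudioKit setup entirely and assumes the tracker supplies an amplitude between 0 and 1 and a frequency in Hz. The function name `cloudAppearance`, the 100 to 1000 Hz voice range, the 50% maximum pulse and the blue-to-red hue scale are all illustrative assumptions.

```swift
import Foundation

/// Hypothetical visual state for the cloud (illustrative, not project code).
struct CloudAppearance {
    let scale: Double  // 1.0 = resting size; larger values make the cloud "pulse" outwards
    let hue: Double    // HSB hue in 0...1: 0.0 = red, 2/3 = blue
}

/// Keeps a value inside the range lo...hi.
func clamp(_ x: Double, _ lo: Double, _ hi: Double) -> Double {
    return min(max(x, lo), hi)
}

/// Assumed mapping: louder input -> bigger pulse, higher pitch -> hue shifts from blue to red.
/// `amplitude` is assumed to be 0...1 and `frequency` in Hz, as a pitch/amplitude tracker might report.
func cloudAppearance(amplitude: Double, frequency: Double,
                     minFrequency: Double = 100, maxFrequency: Double = 1000,
                     maxPulse: Double = 0.5) -> CloudAppearance {
    // Volume drives the size: silence keeps scale 1.0, full volume gives 1.0 + maxPulse.
    let scale = 1.0 + maxPulse * clamp(amplitude, 0, 1)
    // Normalise the frequency into 0...1 within the assumed voice range.
    let t = clamp((frequency - minFrequency) / (maxFrequency - minFrequency), 0, 1)
    // Low pitch -> blue (hue 2/3), high pitch -> red (hue 0).
    let hue = (2.0 / 3.0) * (1.0 - t)
    return CloudAppearance(scale: scale, hue: hue)
}

// Example: a loud, high-pitched reading produces a larger, redder cloud.
let reading = cloudAppearance(amplitude: 0.8, frequency: 700)
print(reading)
```

The hue could then be passed to something like `UIColor(hue:saturation:brightness:alpha:)` to tint the cloud's material, with the scale applied to the cloud object each time a new reading arrives. Keeping the mapping separate from the audio code makes it easy to tune the ranges without touching the microphone setup.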

As you can see in the video, the cloud changes colour or pulses when I click my fingers near the object. I believe this was an effective outcome because it lets users view their emotion through the transformation of the cloud. It also presents that emotion to other people who walk past, who can see the audio within the cloud and understand the kind of emotion, or the severity of the commentary, made in that specific place.